The EU AI Act Footnotes (3)

Have you noticed how legal definitions create odd boundaries?

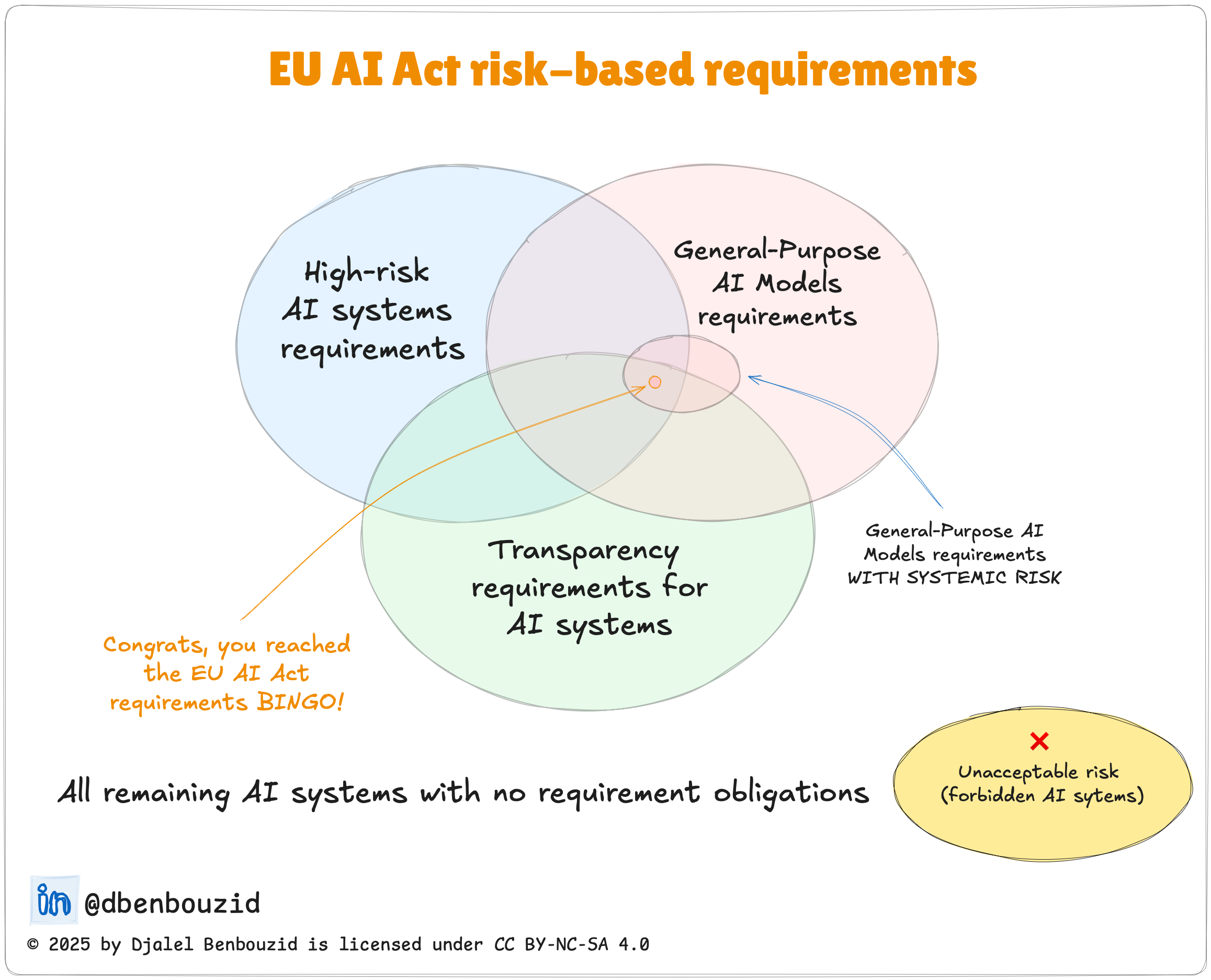

The EU AI Act (Article 3(63)) defines a “general-purpose AI model” (GPAI model) as “an AI model, including where such an AI model is trained with a large amount of data using self-supervision at scale, that displays significant generality and is capable of competently performing a wide range of distinct tasks […]”.

But here’s something interesting: the exact same task can sometimes be performed by models that fall on opposite sides of this definition. Take image segmentation. You could use a GPAI model, such as a large multimodal model, to segment images. But you could also download a specialized model from Hugging Face that does nothing but image segmentation. Same task. Different legal categories.

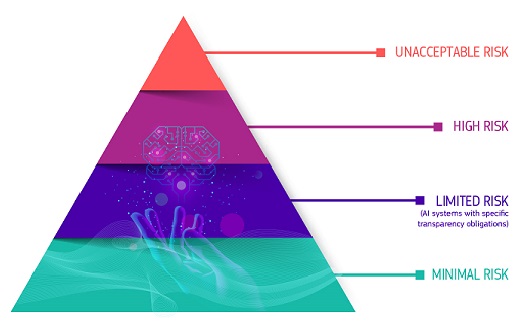

This distinction matters when you’re cataloging your AI systems and classifying their risks for AI Act compliance. Two systems doing identical work might have completely different compliance requirements. The map is not the territory, but in this case, the map determines your legal obligations.

PS: Random observations on AI regulation. Not legal advice.