The EU AI Act: A Catalyst for Responsible Innovation

When I first heard about the EU AI Act, I had the same reaction many in tech did: here comes another regulatory burden. But as I’ve dug deeper, I’ve come to see it differently. This isn’t just regulation; it’s an opportunity to reshape how we approach AI development.

Let’s face it: we’re in the midst of an AI hype cycle. Companies are making grand promises about AI capabilities, setting expectations sky-high. But as anyone who’s worked in tech knows, reality often lags behind the hype. We’re already seeing the fallout: users disappointed by AI that doesn’t live up to the marketing, trust eroding as quickly as it was built.

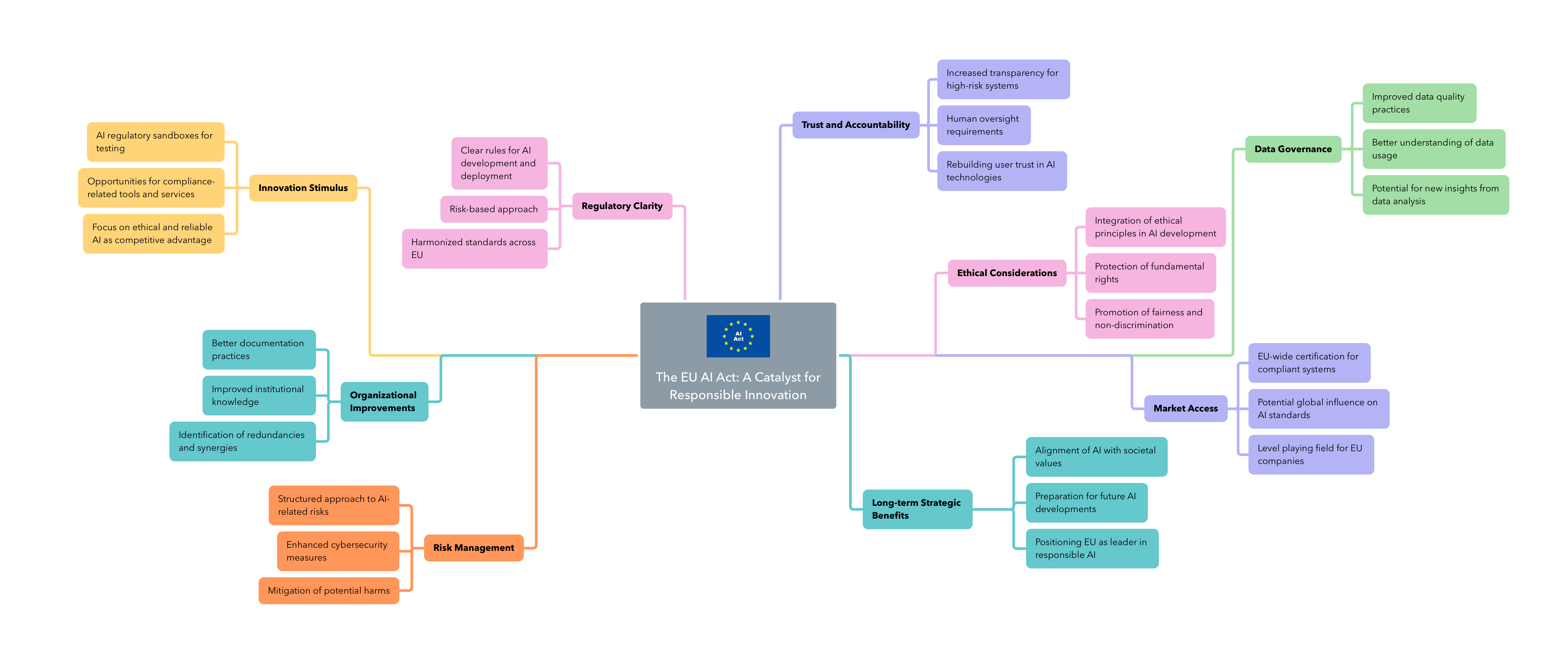

The AI Act might be just what we need to reset this dynamic. By pushing for transparency and accountability, it gives us a chance to rebuild trust on a more solid foundation. Instead of chasing the next big headline, we can focus on creating AI that genuinely delivers value and earns users’ confidence.

Critics worry that the Act will stifle innovation, particularly for smaller companies. But look closer, and you’ll see that the most stringent requirements are focused on high-risk systems. For many AI applications, the regulatory burden will be light. And even for high-risk systems, the costs of compliance should be a small fraction of overall development expenses.

Take healthcare, for instance. It’s a sector where AI could have enormous impact, but also where the stakes are incredibly high. Yet many healthcare companies are finding that they’re already well-positioned to comply with the AI Act. Why? Because they’re used to operating in a highly regulated environment. The processes and safeguards required by the Act often align with existing best practices in the industry.

As someone responsible for defining an AI Governance framework for a major automotive group, I’m seeing firsthand how the Act is driving positive change. Yes, there’s work involved in ensuring compliance. But the process of cataloging our AI systems, assessing risks, and standardizing documentation is already yielding benefits.

We’re uncovering redundancies we didn’t know existed. We’re identifying potential synergies between teams that were working in silos. Most importantly, we’re building a shared understanding of what responsible AI development looks like. These aren’t just compliance exercises; they’re making us better at what we do.
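To make the cataloging step concrete, here is a minimal sketch of what a shared AI system inventory might look like. It is not the framework described above: the `AISystem` fields, the `find_overlaps` helper, and every entry in the sample inventory are hypothetical, and the tier labels are placeholders that follow the commonly cited four-level reading of the Act. Classifying a real system is a legal question, not a coding one.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    # Assumption: the commonly cited four-level reading of the Act.
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass(frozen=True)
class AISystem:
    # Hypothetical minimal record; a real inventory would carry more fields.
    name: str
    team: str
    purpose: str
    risk_tier: RiskTier


def find_overlaps(inventory):
    """Return purposes that are served by systems from more than one team."""
    by_purpose = defaultdict(list)
    for system in inventory:
        by_purpose[system.purpose].append(system)
    return {
        purpose: systems
        for purpose, systems in by_purpose.items()
        if len({s.team for s in systems}) > 1
    }


# Hypothetical entries for illustration only; tiers are placeholders.
inventory = [
    AISystem("lane-assist-v2", "ADAS", "driver assistance", RiskTier.HIGH),
    AISystem("chat-helpdesk", "Customer Care", "customer support", RiskTier.LIMITED),
    AISystem("faq-bot", "Dealer Network", "customer support", RiskTier.LIMITED),
    AISystem("demand-forecast", "Logistics", "demand forecasting", RiskTier.MINIMAL),
]

overlaps = find_overlaps(inventory)
for purpose, systems in overlaps.items():
    print(purpose, [s.name for s in systems])
```

Even a flat list like this is enough to surface two teams building separate tools for the same purpose, which is the kind of redundancy that tends to stay hidden until someone writes everything down in one place.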

The Act is pushing us to think more deeply about the societal impact of our work. It’s easy to get caught up in the technical challenges of AI and lose sight of the bigger picture. But when you’re forced to assess potential harms and put safeguards in place, you start asking important questions. Who might be affected by this system? What could go wrong? How can we make this more robust and fair?

These aren’t just ethical considerations; they’re core to building AI that people will actually want to use and trust over the long term.

There’s also a hidden opportunity here for startups and smaller companies. As larger organizations grapple with compliance, there will be a growing need for tools and services to help navigate the new regulatory landscape. From AI asset management systems to specialized testing tools, there’s a whole new market emerging.

The collaborative spirit in the tech community is also encouraging. We’re already seeing experts share insights on compliance strategies and work on open-source documentation templates. This kind of knowledge-sharing could significantly reduce the burden on individual companies, especially smaller ones.

None of this is to say that compliance will be easy. There will be challenges, particularly in the early days as we all figure out how to interpret and apply the new rules. But I’m convinced that the long-term benefits, for companies, for users, and for society, will far outweigh the short-term costs.

The EU AI Act is pushing us towards a more mature, responsible approach to AI development. It’s an opportunity to move past the hype and build AI systems that are not just powerful, but trustworthy and aligned with societal values.

For companies willing to embrace this challenge, the rewards could be significant. We have a chance to shape the future of AI in a way that creates lasting value and earns genuine trust. That’s an opportunity we can’t afford to miss.